TENSORFLOW

Contents

Installation

ANACONDA

The open-source Anaconda Distribution is the easiest way to perform Python/R data science and machine learning on Linux, Windows, and Mac OS X. With over 15 million users worldwide, it is the industry standard for developing, testing, and training on a single machine, enabling individual data scientists to:

- Quickly download 1,500+ Python/R data science packages

- Manage libraries, dependencies, and environments with Conda

- Develop and train machine learning and deep learning models with scikit-learn, TensorFlow, and Theano

- Analyze data with scalability and performance with Dask, NumPy, pandas, and Numba

- Visualize results with Matplotlib, Bokeh, Datashader, and Holoviews

Download : https://www.anaconda.com/distribution/

PYCHARM - IDE

PyCharm is an IDE for both scientific and web Python development, with HTML, JS, and SQL support.

Download : https://www.jetbrains.com/pycharm/download/#section=mac

TENSORFLOW

Download : https://www.tensorflow.org/install

Alternatively, run TensorFlow in a Docker container:

docker pull tensorflow/tensorflow # Download latest image

docker run -it -p 8888:8888 tensorflow/tensorflow:latest-jupyter # Start a Jupyter notebook server

Neural Network

Hello World of AI

MNIST

Deep Learning

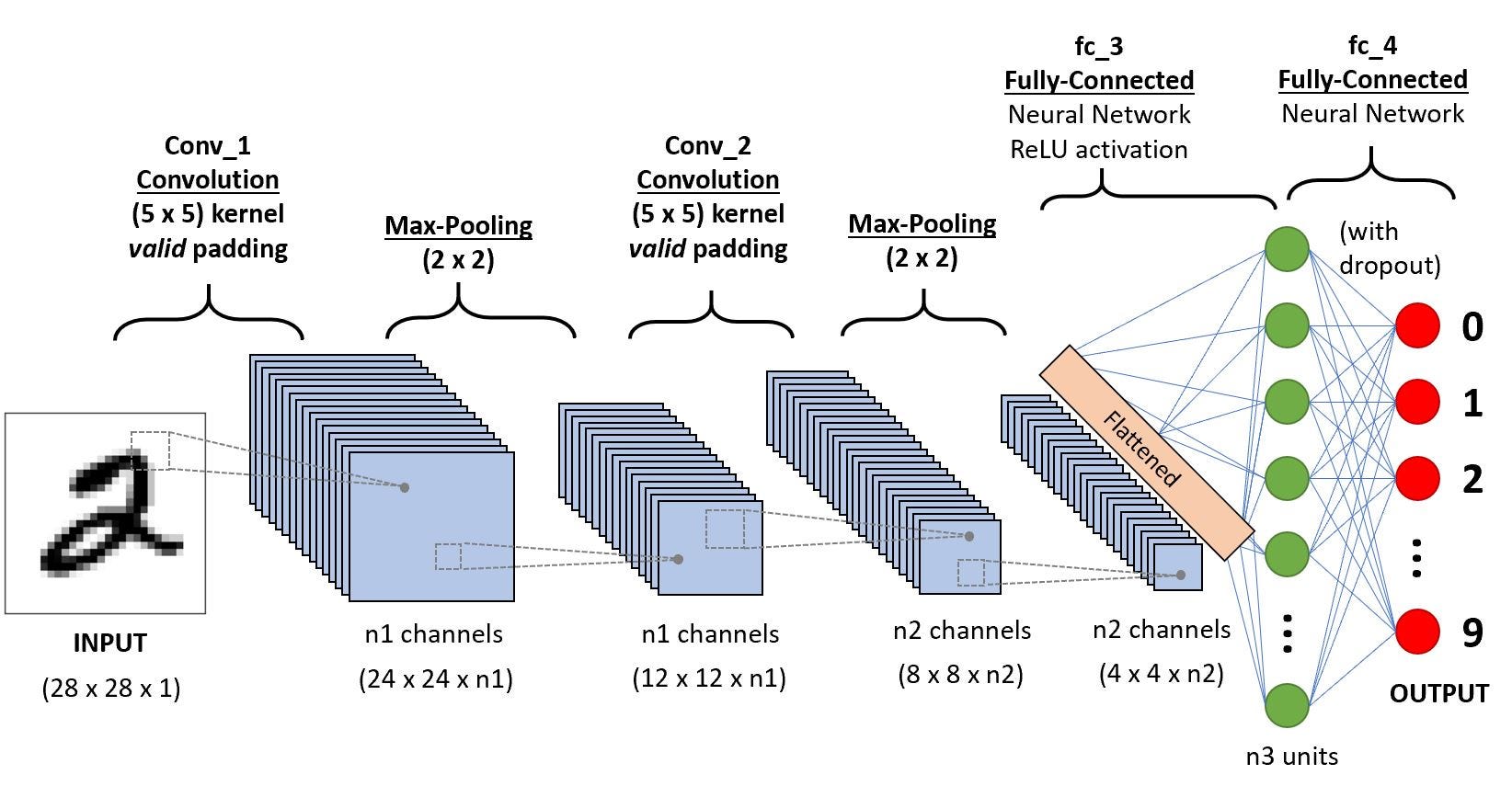

Layer Types

- Fully connected (dense) layers: Fully connected layers connect every neuron in one layer to every neuron in the next layer. In principle this is the same as a traditional multi-layer perceptron (MLP). In an image classifier, the flattened feature matrix passes through a fully connected layer to classify the images.

- Convolutional layers: The building block of a convolutional neural network (ConvNet), a deep learning algorithm that takes an input image, assigns importance (learnable weights and biases) to various aspects/objects in the image, and differentiates one from another. A ConvNet requires much less pre-processing than other classification algorithms: where primitive methods rely on hand-engineered filters, a ConvNet can learn these filters/characteristics given enough training.

- Pooling layers: Like the convolutional layer, the pooling layer reduces the spatial size of the convolved feature. This dimensionality reduction decreases the computational power required to process the data. It also helps extract dominant features that are rotationally and positionally invariant, which keeps training of the model effective.

- Recurrent layers

- Normalization layers

- ..
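The first three layer types above can be sketched in plain Python to show how data flows from one to the next. This is an illustrative sketch only, not how TensorFlow implements these layers: the function names (`conv2d_valid`, `max_pool2x2`, `dense`), the 5x5 image, the kernel, and the dense weights are all hypothetical values chosen for the example.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool2x2(feature):
    """Keep the largest value in each non-overlapping 2x2 window."""
    return [
        [
            max(feature[r][c], feature[r][c + 1],
                feature[r + 1][c], feature[r + 1][c + 1])
            for c in range(0, len(feature[0]) - 1, 2)
        ]
        for r in range(0, len(feature) - 1, 2)
    ]


def dense(inputs, weights, biases):
    """Every output neuron is connected to every input value."""
    return [
        sum(x * w for x, w in zip(inputs, row)) + b
        for row, b in zip(weights, biases)
    ]


# Hypothetical 5x5 "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hand-picked vertical-edge kernel; a trained ConvNet would learn these values.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, kernel)   # 4x4 map, large where the edge is
pooled = max_pool2x2(feature_map)           # 2x2 map, spatial size halved
flat = [v for row in pooled for v in row]   # flatten to 4 values
# Made-up weights: two output neurons, each connected to all four inputs.
scores = dense(flat, weights=[[1, 0, 1, 0], [0, 1, 0, 1]], biases=[0.0, 0.5])

print(feature_map)
print(pooled)
print(scores)
```

The convolution responds only where the edge sits, pooling shrinks the map while keeping that response, and the dense layer combines all pooled values into per-class scores.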

Hidden-layer simulation platform for TensorFlow: playground.tensorflow.org

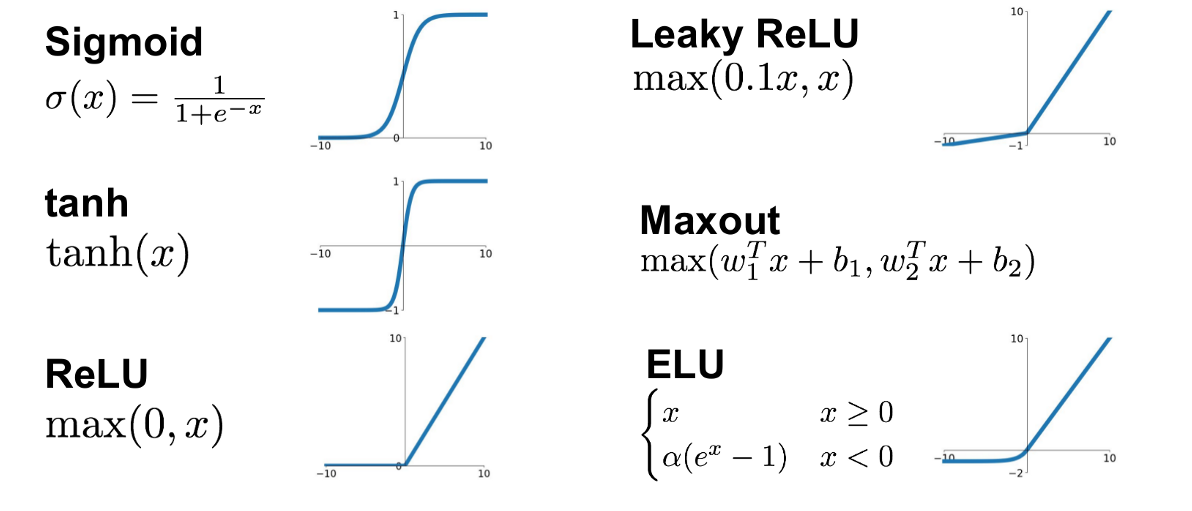

Activation Functions

Ideally, we would like to provide a set of training examples and let the computer adjust the weights and biases so that the errors produced in the output are minimized.

Suppose we have some images that contain humans and others that do not. As the computer processes these images, we would like the neurons to adjust their weights and biases so that fewer and fewer images are wrongly recognized. This requires that a small change in the weights (and/or bias) causes only a small change in the output.

Unfortunately, a network of perceptrons does not show this little-by-little behavior. A perceptron's output is either 0 or 1, and that big jump does not help it learn. We need something smoother: a function that changes progressively from 0 to 1 with no discontinuity.
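The difference can be made concrete with a single neuron. The sketch below assumes a one-input neuron with made-up values (`x`, `b`, and the two weights are hypothetical) and compares how a hard step and a sigmoid respond to the same tiny change in weight.

```python
import math


def step(z):
    """Perceptron-style activation: the output jumps from 0 to 1 at z = 0."""
    return 1 if z >= 0 else 0


def sigmoid(z):
    """Smooth activation: the output moves gradually from 0 to 1."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(x, w, b, activation):
    """Single-input neuron: activation(w * x + b)."""
    return activation(w * x + b)


x, b = 1.0, 0.0                   # hypothetical input and bias
w_before, w_after = -0.01, 0.01   # a tiny change in the weight

step_jump = neuron(x, w_after, b, step) - neuron(x, w_before, b, step)
sigmoid_shift = neuron(x, w_after, b, sigmoid) - neuron(x, w_before, b, sigmoid)

print("step output change:   ", step_jump)
print("sigmoid output change:", sigmoid_shift)
```

With the step function the output flips completely, while the sigmoid output moves by only a small amount, which is what lets training nudge weights a little at a time.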

Sigmoid or Logistic Activation Function

tanh Activation Function

ReLU Activation Function

ELU
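As a quick reference, the four functions listed above can be written in a few lines of plain Python. These are the standard textbook definitions rather than TensorFlow's own implementations, and the `alpha=1.0` default for ELU is an assumption made for this sketch.

```python
import math


def sigmoid(z):
    """Logistic function: squashes any real z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Hyperbolic tangent: squashes z into (-1, 1) and is centered at 0."""
    return math.tanh(z)


def relu(z):
    """Rectified linear unit: passes positive z through, clips negatives to 0."""
    return max(0.0, z)


def elu(z, alpha=1.0):
    """Exponential linear unit: like ReLU for z > 0, smooth and bounded by -alpha below."""
    return z if z > 0 else alpha * (math.exp(z) - 1.0)


# Print each activation at a few sample inputs.
for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z), elu(z))
```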

resource : https://towardsdatascience.com/complete-guide-of-activation-functions-34076e95d044